Prism from Microsoft Patterns & Practices leans on an Inversion of Control (IoC) container. While Prism is nominally indifferent to your choice of container, you have to work a bit to extricate yourself from its default choice, Microsoft Unity. More so with some containers than others.

The sweat and tears flow in the “Bootstrapper” class with which you launch your application. Most Prism samples feature a Bootstrapper that inherits from “UnityBootstrapper”. “UnityBootstrapper” busies itself with registering and configuring a dozen or so Prism components in a manner characteristic of Unity and it unapologetically takes a hard dependence on Unity itself. You are welcome to write your own Bootstrapper base class from scratch; of course this entails reading and understanding what “UnityBootstrapper” does so you can reassure yourself that your replacement achieves the same effects. Who has time for that?

Almost a year ago I found myself writing a single application with three different client technologies, each dependent upon its own container. The three diverged in minor details – each was a different incarnation of a Container + ObjectBuilder – but the differences were sufficiently great that I felt compelled to unleash the Prism base bootstrapper class from its Unity chains.

Preoccupied with more important business, I took the most expeditious route. I salvaged as much of Prism’s UnityBootstrapper as I could while replacing its direct dependency on Unity library references.

Prism initialization is not something I want to own. When P&P updates its UnityBootstrapper, I want a quick, no-thought pathway to update my IoC-agnostic version.

The result was Cal.Bootstrapper (“CAL” is short for “Composite Application Library”, Prism’s official name) and some supporting types. Cal.Bootstrapper looks like a cleaned-up UnityBootstrapper. It goes through the same motions of registering types and configuring instances albeit with reference to a denuded IoC, shorn of all but the most minimal functionality. This, dear reader, will be my gift to you.

Recently, a few folks have wondered about replacing Unity with a completely different IoC container. The one I hear most often is StructureMap. Its author, Jeremy Miller, has several times entertained the notion of replacing “UnityBootstrapper” with something that reflects glory on StructureMap. Apparently he has better things to do. I guess I’ll tackle it.

The Goods

I’ve bundled everything described in this post into a 2 meg zip, PrismRI-Ioc.zip, downloadable from my company website. Do with it as you will.

Please note: I have no intention of maintaining this; don’t even ask.

No, This is Not the Right Way

My knowledge of StructureMap is deeply impoverished but, from the little I do know, I’m certain that the “proper” course is to abandon “UnityBootstrapper” altogether. But there’s a reason Jeremy hasn’t tackled this and no one seems to have done so for other IoC products either.

Nicholas Blumhardt, author of Autofac, took a crack at integrating Prism with his IoC back in August, 2009. He wanted to do it “the right way,” completely rethinking how a Prism app is bootstrapped and how application modules are written. He describes it here as a “work-in-progress”. I guess it took a little more work than he thought it would and he must have found something better to do because it doesn’t look like he finished.

I hoped to avoid this “road to good intentions” by doing it “the easy way”, which may be the “wrong way” but it works … which beats something that doesn’t.

I decided to change as little of the Unity-oriented Prism app approach as I could. I want to get something done. I want you to be able to convert your running Prism app with minimal effort.

Accordingly, my “Cal.Bootstrapper” mimics the “UnityBootstrapper” and it wallows in the style and ceremony that other IoC products despise.

Is it all that bad? Why am I changing my IoC container anyway? I ought to have a clear idea about what I want from an alternative to Unity. I’m wasting my time if I’m only replacing the UnityBootstrapper with something “better”. Frankly, I don’t care how Prism configures its minimal requirements. If I want StructureMap (or Autofac, Castle Windsor, Ninject, etc.), it is because I prefer that IoC for registering and configuring my own application components. I may replace the Prism configuration eventually but right now that is neither my motivation nor my first step.

Therefore, although my Cal.Bootstrapper is ugly as sin, it will serve me for the nonce as I hope it serves you and I shall proceed to describe it without further apology. I will let it configure Prism the ugly way using my IoC container in place of Unity after which I am free in my own code to drive my IoC container as it was meant to be driven.

Battle Plan

A Prism application has a Bootstrapper class to configure the container, create and configure the main UI view called the “shell”, acquire application modules, and launch the application.

You are expected to write a custom Bootstrapper that inherits from a base Bootstrapper class provided by Prism (the aforementioned UnityBootstrapper). This base class handles the above inventory of start-up tasks. Your derived Bootstrapper is supposed to extend the base class by overriding certain abstract and virtual methods.

In other words, the bootstrapper is launch karaoke. You add your voice at predefined moments. It’s great if the music suits you. If you’re singing off key, you should stop faking it and write your own song.

Tempted as I was, I chose not to quarrel with this approach. In fact, I took a deep breath and then embraced it … for the reasons discussed above.

I decided to replace the UnityBootstrapper with an alternative base class (Cal.Bootstrapper) that does not know about Unity.

Your derived Bootstrapper provides an IoC container to this base class, wrapped in a vendor-neutral “ContainerAdapter”, by overriding the base class’s abstract “CreateContainerAdapter” method.
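Here is a minimal sketch of that arrangement, not the code in the download. Only the names “IContainerAdapter” and “CreateContainerAdapter” come from this post; the two-method interface, “SketchBootstrapper”, its “Run” method, “FakeContainerAdapter”, and “MyAppBootstrapper” are hypothetical stand-ins I made up to show the shape of the override.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, cut-down stand-in for the real adapter interface.
public interface IContainerAdapter
{
    IContainerAdapter RegisterInstance<T>(T instance);
    T Resolve<T>() where T : class;
}

// Stand-in for the IoC-agnostic base class: it drives start-up
// but never names a concrete container.
public abstract class SketchBootstrapper
{
    public IContainerAdapter Container { get; private set; }

    // The derived bootstrapper supplies the wrapped container here.
    protected abstract IContainerAdapter CreateContainerAdapter();

    public void Run()
    {
        Container = CreateContainerAdapter();
        if (Container == null)
            throw new InvalidOperationException("CreateContainerAdapter returned null.");

        // Assumption: the base class makes the adapter itself resolvable.
        Container.RegisterInstance<IContainerAdapter>(Container);
    }
}

// Toy adapter over a dictionary; a real one would wrap Unity, StructureMap, etc.
public sealed class FakeContainerAdapter : IContainerAdapter
{
    private readonly Dictionary<Type, object> _instances = new Dictionary<Type, object>();

    public IContainerAdapter RegisterInstance<T>(T instance)
    {
        _instances[typeof(T)] = instance;
        return this;
    }

    public T Resolve<T>() where T : class
    {
        return (T)_instances[typeof(T)];
    }
}

// The application's bootstrapper only has to say which adapter to use.
public sealed class MyAppBootstrapper : SketchBootstrapper
{
    protected override IContainerAdapter CreateContainerAdapter()
    {
        return new FakeContainerAdapter();
    }
}
```

Calling “new MyAppBootstrapper().Run()” is all the application does; everything container-specific lives behind the one override.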

I have a few additional goals:

- “Improve” the UnityBootstrapper so I can figure out what it does.

- Make it easy to update my base class when (if) Microsoft updates the UnityBootstrapper.

- Minimize the effort to get my existing app bootstrapper deriving from the IoC-agnostic base class.

- Minimize the changes necessary to convert my existing Unity-based Prism app.

- “Prove it” with an app Prism-aficionados know: the Prism Reference Implementation.

The original UnityBootstrapper is code only a mother could love. Long methods; much repetition; mysterious names. I can’t replace it until I understand what it does. My first step is to refactor it for readability. This is somewhat a matter of taste but I hope you’ll agree that my revision, called “RefinedUnityBootstrapper”, is an improvement.

I mustn’t get carried away if I am to satisfy my second subsidiary goal. Too many improvements would hinder re-synchronization with Microsoft’s changes.

I want to prove that my approach works on an “application” that anyone can examine and that would be familiar to Prism developers. The Prism RI is the obvious candidate; that’s what I tackled.

I haven’t looked at the RI in a long time. The version on my disk dates from February 2009. That’s pre-Silverlight 3 and, although I had patched it for SL 3, it’s still old and funky. The UI doesn’t work the way it should: stock positions don’t change when you buy and sell, the “Watch List” feature is broken, the Silverlight version throws a recoverable exception when you sell more stock than you own.

But I didn’t have time to freshen it up, it was good enough for my purposes, and it’s all I’m going to give you.

Swappable Versions

This is a tutorial. I want you to be able to switch among different container implementations without changing the body of the application. I don’t have the time or the interest to do anything fancy. In the “real” world, I’d make the change and discard the original.

The version of the RI you run depends entirely upon the executing version of the Prism RI Bootstrapper class. The class is divided into two partial class files. One of them is constant. The other holds just the code that varies from container to container. The file with variations is called StockTraderRIBootstrapper.Ioc.Desktop or StockTraderRIBootstrapper.Ioc.Silverlight.

The variations are governed by compiler directives at the top. You uncomment ONE of them, recompile, and let ‘er rip. The example below shows the bootstrapper readied for StructureMap.

    //***************************************************************************
    // *** PICK YOUR BOOTSTRAPPER AND IOC CONTAINER WITH _ONE_ OF THESE DEFINES
    //#define ORIGINAL_UNITYBOOTSTRAPPER
    //#define REFINED_UNITYBOOTSTRAPPER
    //#define UNITY_CONTAINER_ADAPTER
    #define STRUCTUREMAP_CONTAINER_ADAPTER
    //#define AUTOFAC_CONTAINER_ADAPTER
    //***************************************************************************

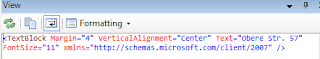

Each variation takes only a few lines. I set the title text so you can see which version is running on screen (the original three-letter “CFI” company name changes to one of “OUB”, “RUB”, “UCA”, “SMA”, or “ACA” to match your selection). For the Ioc-agnostic variations, there is an override of the method that creates the selected Ioc ContainerAdapter. The two versions pinned to the UnityBootstrapper (remember that I “refined” the UnityBootstrapper first simply to understand it better) have a shim method instead.

Incredibly stupid, I know. I’d never ship anything like this. I live with it because it’s a throwaway demo and doesn’t merit the effort or complexity of proper decoupling. Or at least it doesn’t merit that attention yet.
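If you haven’t seen the trick before, the shape is roughly as follows. This is a sketch and not the actual file from the download: the two symbol names and the “SMA”/“ACA” tags come from this post, while “DemoBootstrapper”, “DescribeSelection”, and the two stub adapter classes are hypothetical, and the real variations override a base-class method rather than declaring a plain one.

```csharp
#define STRUCTUREMAP_CONTAINER_ADAPTER
//#define AUTOFAC_CONTAINER_ADAPTER

// Hypothetical stand-ins so the sketch compiles on its own.
public interface IContainerAdapter { }
public sealed class StructureMapAdapterStub : IContainerAdapter { }
public sealed class AutofacAdapterStub : IContainerAdapter { }

// "Constant" half: identical no matter which container is selected.
public partial class DemoBootstrapper
{
    public string DescribeSelection()
    {
        return TitleTag + " -> " + CreateContainerAdapter().GetType().Name;
    }
}

// "Varying" half: exactly one branch survives compilation.
public partial class DemoBootstrapper
{
#if STRUCTUREMAP_CONTAINER_ADAPTER
    public const string TitleTag = "SMA";
    public IContainerAdapter CreateContainerAdapter() { return new StructureMapAdapterStub(); }
#elif AUTOFAC_CONTAINER_ADAPTER
    public const string TitleTag = "ACA";
    public IContainerAdapter CreateContainerAdapter() { return new AutofacAdapterStub(); }
#else
#error Define exactly one container symbol at the top of this file.
#endif
}
```

Remember that C# requires “#define” to come before any other code in the file, which is why the directives sit at the very top.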

The rest of the Prism RI stays constant. It took some work to keep it that way. Not a lot, fortunately; the Patterns and Practices people did a pretty good job of keeping the RI Ioc-neutral.

I won’t bore you with the details of my journey to this blissful place. They are chronicled in “PrismRIChanges.txt”, located in the PrismRI folder next to the Visual Studio Solution file, “StockTraderRI-IoC.sln”. The gist of it is

- Add the Ioc assemblies in a common directory structure

- Add the PrismBootstrapper solution with its Ioc-agnostic Cal.Bootstrapper and the Ioc-specific ContainerAdapters.

- Revise the start-up project, StockTraderRI, to access the above

- Replace references to “IUnityContainer” with “IContainerAdapter”

- Remove module references to Unity.

You’ll do pretty much the same if you convert your existing Prism application to another Ioc container.

ContainerAdapters

The fun is in the ContainerAdapters which implement the following interface:

    namespace Cal.Bootstrapper {

        public interface IContainerAdapter {

            IContainerAdapter RegisterInstance<T>(T instance);

            IContainerAdapter RegisterType<TFrom, TTo>(
                ContainerRegistrationScope scope) where TTo : TFrom;

            IContainerAdapter RegisterType(
                Type fromType, Type toType, ContainerRegistrationScope scope);

            IContainerAdapter RegisterTypeIfMissing<TFrom, TTo>(
                ContainerRegistrationScope scope) where TTo : TFrom;

            IContainerAdapter RegisterTypeIfMissing(
                Type fromType, Type toType, ContainerRegistrationScope scope);

            T Resolve<T>() where T : class;

            object Resolve(Type type);

            T TryResolve<T>() where T : class;

            object TryResolve(Type type);
        }

        public enum ContainerRegistrationScope {
            Singleton,
            Instance,
        }
    }

Not so bad if you know your container. Clearly IContainerAdapter must be so dumb that any IoC Container vendor can support it. It needs to be just smart enough for the base Bootstrapper class to configure Prism. Fortunately, this challenge is easily met.

The code tends to be boilerplate. Autofac took a little more work (see below) but still weighs in at only 180 lines.
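To give a feel for how little the interface demands, here is a toy adapter over a plain dictionary. It is not one of the adapters in the download and it wraps no real container: “ToyContainerAdapter” and the greeter types are hypothetical, it handles parameterless constructors only, and I am assuming that “IfMissing” means “leave an existing registration alone”. The interface and enum are repeated so the sketch compiles by itself.

```csharp
using System;
using System.Collections.Generic;

public enum ContainerRegistrationScope { Singleton, Instance }

public interface IContainerAdapter
{
    IContainerAdapter RegisterInstance<T>(T instance);
    IContainerAdapter RegisterType<TFrom, TTo>(ContainerRegistrationScope scope) where TTo : TFrom;
    IContainerAdapter RegisterType(Type fromType, Type toType, ContainerRegistrationScope scope);
    IContainerAdapter RegisterTypeIfMissing<TFrom, TTo>(ContainerRegistrationScope scope) where TTo : TFrom;
    IContainerAdapter RegisterTypeIfMissing(Type fromType, Type toType, ContainerRegistrationScope scope);
    T Resolve<T>() where T : class;
    object Resolve(Type type);
    T TryResolve<T>() where T : class;
    object TryResolve(Type type);
}

// Toy adapter: parameterless constructors only, no constructor injection.
public sealed class ToyContainerAdapter : IContainerAdapter
{
    private readonly Dictionary<Type, Func<object>> _factories =
        new Dictionary<Type, Func<object>>();

    public IContainerAdapter RegisterInstance<T>(T instance)
    {
        _factories[typeof(T)] = () => instance;
        return this;
    }

    public IContainerAdapter RegisterType<TFrom, TTo>(ContainerRegistrationScope scope) where TTo : TFrom
    {
        return RegisterType(typeof(TFrom), typeof(TTo), scope);
    }

    public IContainerAdapter RegisterType(Type fromType, Type toType, ContainerRegistrationScope scope)
    {
        if (scope == ContainerRegistrationScope.Singleton)
        {
            // Create lazily, then hand back the same object every time.
            object cached = null;
            _factories[fromType] = () => cached ?? (cached = Activator.CreateInstance(toType));
        }
        else
        {
            // Instance scope: a fresh object per resolution.
            _factories[fromType] = () => Activator.CreateInstance(toType);
        }
        return this;
    }

    public IContainerAdapter RegisterTypeIfMissing<TFrom, TTo>(ContainerRegistrationScope scope) where TTo : TFrom
    {
        return RegisterTypeIfMissing(typeof(TFrom), typeof(TTo), scope);
    }

    public IContainerAdapter RegisterTypeIfMissing(Type fromType, Type toType, ContainerRegistrationScope scope)
    {
        // Assumption: an existing registration wins over the default.
        return _factories.ContainsKey(fromType) ? this : RegisterType(fromType, toType, scope);
    }

    public T Resolve<T>() where T : class { return (T)Resolve(typeof(T)); }

    public object Resolve(Type type)
    {
        object result = TryResolve(type);
        if (result == null)
            throw new InvalidOperationException("Not registered: " + type.FullName);
        return result;
    }

    public T TryResolve<T>() where T : class { return (T)TryResolve(typeof(T)); }

    public object TryResolve(Type type)
    {
        // This toy never auto-creates unregistered types; it returns null instead.
        Func<object> factory;
        return _factories.TryGetValue(type, out factory) ? factory() : null;
    }
}

// Hypothetical types for trying it out.
public interface IGreeter { }
public class DefaultGreeter : IGreeter { }
public class CustomGreeter : IGreeter { }
```

Register “CustomGreeter” for “IGreeter” first and a later “RegisterTypeIfMissing<IGreeter, DefaultGreeter>” leaves it in place. A real adapter forwards each of these calls to the wrapped container instead of a dictionary.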

You also have to write an adapter that implements Microsoft.Practices.ServiceLocation.IServiceLocator; virtually everyone has done this already.

IoC Containers Demo’d

I’ve tried this approach with three IoC Containers: Unity (Unity v.1.2.0.0, ObjectBuilder2 v.2.2.2.0), StructureMap (v.2.5.4.0), and Autofac (Desktop: v.1.4.5.676, Silverlight: 1.4.4.572).

You should know that I am not an expert in any of these containers. In fact, I just winged it, especially with StructureMap and Autofac about which I know squat. I figured I could download them, skim whatever documentation they offered, and get something to work adequately for Shmoes like me.

It’s not quite that easy and I have low confidence in my use of these containers. Their authors are invited to lend a hand improving matters … after they’re done laughing.

I wanted to show at least one non-Unity container for both desktop and Silverlight applications. StructureMap doesn’t have a Silverlight version yet (come on, Jeremy). I tried Autofac because it does have a Silverlight version.

I’m glad I did. The StructureMap implementation was a breeze. Autofac was much harder. I’m not trashing Autofac. It just happened to be harder for me, partly due to my ignorance and mostly because of the position Autofac takes on resolving unregistered types.

Major Container “Gotcha”

Container differences matter! One pervasive Prism practice in particular relies on a Unity default behavior that other containers do not observe. It’s serious enough to influence your choice of containers or make your conversion substantially more difficult.

Prism instantiates a lot of its own components with unregistered public concrete classes. For example, somewhere in the bowels of RegionManager initialization, it resolves “DelayedRegionCreationBehavior”. This class is never registered with the container.

Unity is ok with this. When asked to resolve an unregistered public concrete type, it treats the type as if it had been registered and scoped as an instance (i.e., you get a fresh instance each time). Both TryResolve and Resolve behave this same way.

Prism components count on this default Unity container behavior. What if you change containers?

StructureMap has the same default behavior when resolving an unregistered public concrete type. However, its TryResolve equivalent, “TryGetInstance”, returns null. As I write, I have not detected a consequence of this difference; but I know it’s waiting in the grass somewhere.

You could argue all day about whether a container should return null or return an instance the way Unity does. You could argue whether it should default to Singleton or Instance scope.

Autofac punts. If you attempt to Resolve an unregistered type, it throws an Autofac.ComponentNotRegisteredException with a clear message. “TryResolve” returns null, which is the sensible thing to do.

Aside: the three ContainerAdapter files in my sample implement a “ContainerPlay” class that reveals these differences.
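A probe in that spirit might look like the sketch below. It is not the “ContainerPlay” class from the download; “ResolutionProbe”, “Widget”, and “FakeContainers” are hypothetical names, and the three fakes call no real container. They only mimic the behaviors described above so the probe has something to report on.

```csharp
using System;

// Stands in for an unregistered public concrete class.
public class Widget { }

public static class ResolutionProbe
{
    // Reports how a container treats a type it was never told about.
    public static string Describe(
        Func<Type, object> resolve, Func<Type, object> tryResolve, Type type)
    {
        return "Resolve: " + Outcome(resolve, type)
            + "; TryResolve: " + Outcome(tryResolve, type);
    }

    private static string Outcome(Func<Type, object> call, Type type)
    {
        try
        {
            return call(type) == null ? "null" : "instance";
        }
        catch (Exception ex)
        {
            return "throws " + ex.GetType().Name;
        }
    }
}

// Fakes that imitate the three policies; no real container is involved.
public static class FakeContainers
{
    public static readonly Func<Type, object> CreateAlways =
        t => Activator.CreateInstance(t);

    public static readonly Func<Type, object> NullAlways =
        t => null;

    public static readonly Func<Type, object> ThrowAlways =
        t => { throw new InvalidOperationException("not registered: " + t.Name); };
}
```

Pairing “CreateAlways” with “CreateAlways” imitates the Unity behavior, “CreateAlways” with “NullAlways” the StructureMap behavior, and “ThrowAlways” with “NullAlways” the Autofac behavior. To probe a real adapter you would pass its non-generic Resolve and TryResolve methods instead of the fakes.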

I can’t quarrel with Autofac’s choice but I sure paid the price for purity. I had to hunt down all resolutions of concrete types and manually register them during AutofacContainerAdapter initialization. Unfortunately even that is insufficient. Many class constructors take parameters defined by concrete types. The container will attempt to resolve these and will throw at runtime. There aren’t integration tests to detect these automatically so I have to wander through the app under the debugger. I still haven’t got it to work.
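One way to shorten the hunt, offered as a sketch I have not run against Prism, is to let reflection walk the constructor parameters and list the concrete classes a strict container would need registered. “ConcreteDependencyFinder” and the sample types are hypothetical. The sketch assumes the container uses the public constructor with the most parameters, which you should check against your container’s actual rule, and it cannot see types that are resolved by explicit calls inside method bodies.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class ConcreteDependencyFinder
{
    // Returns the root type plus every concrete class reachable through
    // the parameters of the largest public constructor at each level.
    public static ISet<Type> Find(Type root)
    {
        var found = new HashSet<Type>();
        Visit(root, found);
        return found;
    }

    private static void Visit(Type type, HashSet<Type> found)
    {
        // Skip interfaces and abstractions; Add returns false for types
        // already seen, which also stops circular dependencies.
        if (!IsConcreteClass(type) || !found.Add(type)) return;

        // Assumption: the container picks the constructor with the most parameters.
        ConstructorInfo ctor = type.GetConstructors()
            .OrderByDescending(c => c.GetParameters().Length)
            .FirstOrDefault();
        if (ctor == null) return;

        foreach (ParameterInfo p in ctor.GetParameters())
            Visit(p.ParameterType, found);
    }

    private static bool IsConcreteClass(Type t)
    {
        return t.IsClass && !t.IsAbstract && !t.IsArray
            && t != typeof(string)
            && !typeof(Delegate).IsAssignableFrom(t);
    }
}

// Hypothetical types for the demonstration.
public interface ILogger { }
public class Clock { }
public class Scheduler { public Scheduler(Clock clock, ILogger logger) { } }
public class Shell { public Shell(Scheduler scheduler) { } }
```

“ConcreteDependencyFinder.Find(typeof(Shell))” yields Shell, Scheduler, and Clock. ILogger is left out because an interface has to be registered deliberately anyway. Each type in the result could then be passed to the adapter’s “RegisterTypeIfMissing(type, type, ContainerRegistrationScope.Instance)”.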

It was easier to get the Prism RI working with StructureMap. I have assumed that the only critical difference is with explicit “TryResolve” calls. I haven’t encountered any optional constructor parameters so I expect StructureMap and Unity to resolve these in the same way.

I searched the Prism and RI source code for “TryResolve” calls involving concrete types. There weren’t many of them. I only found one of potential consequence. The base bootstrapper calls “TryResolve<RegionAdapterMappings>”. Fortunately, it also registers RegionAdapterMappings explicitly so resolution is always successful. Perhaps I was lucky. Remember to be careful how you use TryResolve in your application.

The net of it is that containers differ. Prism should not have relied on a Unity-specific type resolution feature. If you have done so in your Unity-based application (as I have), you too are vulnerable.

Where Did The Tests Go?

I removed the test projects from the Prism RI and the Composite Application Library projects that go with it. Why did I do that? Laziness?

I really thought this simple task would take maybe a long day at most. Stripping the tests away from the start was supposed to help me stay in the time box. It was going to be non-trivial to set up Prism’s test environment, convert the tests, and repackage for distribution to you. I didn’t think I could afford it.

The point of the exercise was to demonstrate how to liberate your application to use an alternative container. I wasn’t trying to deliver a product and there is limited value in tests for a version of the Prism RI that is (a) obsolete and (b) irrelevant to your application.

Unfortunately, this “simple” task kept expanding in scope; it took four long days. Geez, like that never happens.

Now that I’m “done” I wish I had those tests back, the UnityBootstrapperFixture in particular. Debugger testing is painful as we all know. Had I committed to automated tests, I would have written something better than the idiotic compiler-directive-based approach to swapping containers. Sure it would have cost me something – a day? – to get it right, a day I didn’t want to spend. But if someone wants to take this further, the tests would accelerate his progress greatly and imbue his efforts with confidence. Mea culpa.

I wonder if some kind soul will restore them?

Happy New Year, everyone!